An important challenge when deploying computer vision models in the real world is evaluating their performance in potential out-of-distribution (OOD) scenarios.

While simple synthetic corruptions are commonly applied to test OOD robustness, they mostly fail to capture the nuisance shifts that occur in the real world.

Recently, diffusion models have been used to generate realistic images for benchmarking, but these approaches are restricted to binary nuisance shifts.

In this work, we introduce CNS-Bench, a Continuous Nuisance Shift Benchmark to quantify the OOD robustness of image classifiers under continuous and realistic generative nuisance shifts.

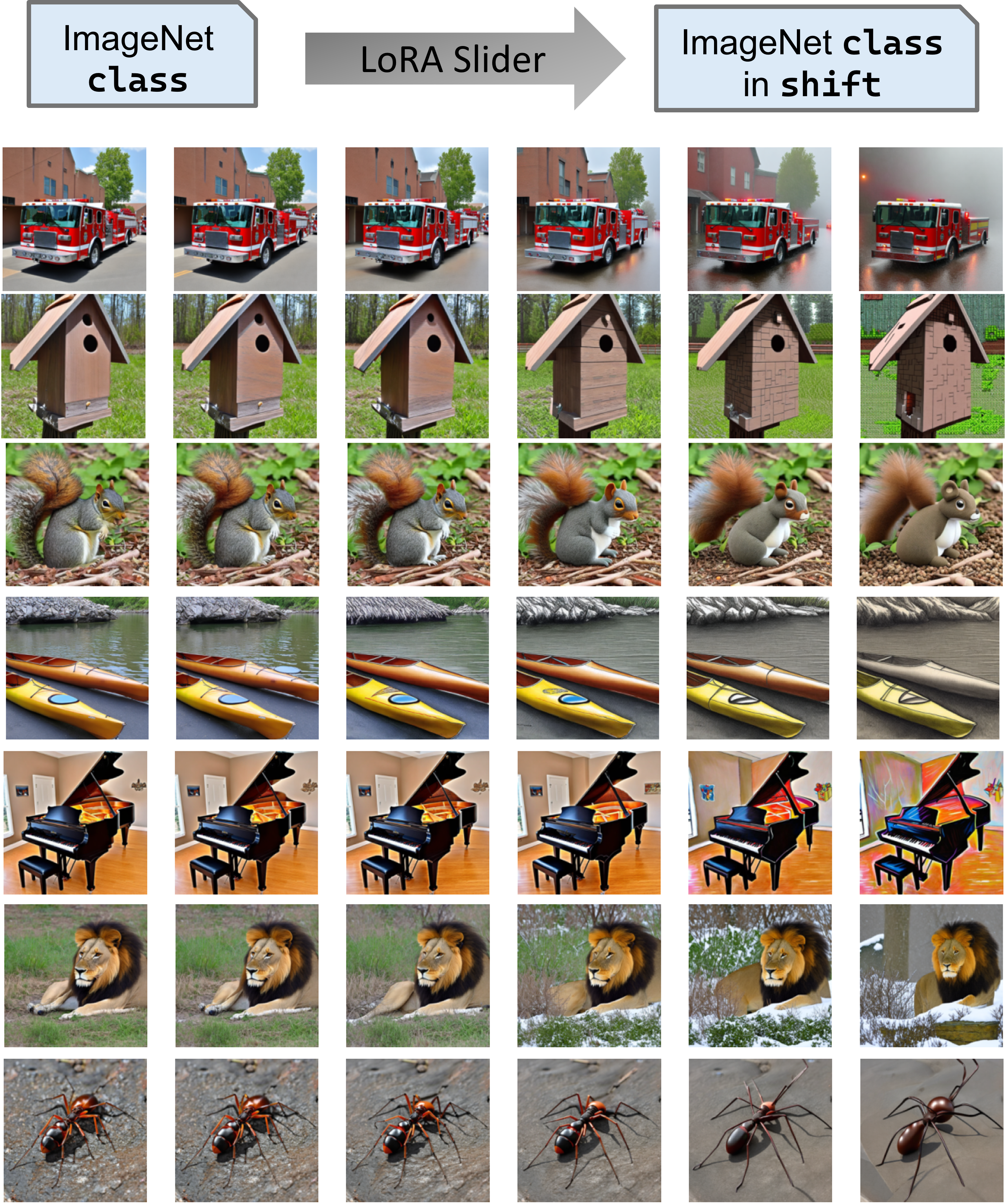

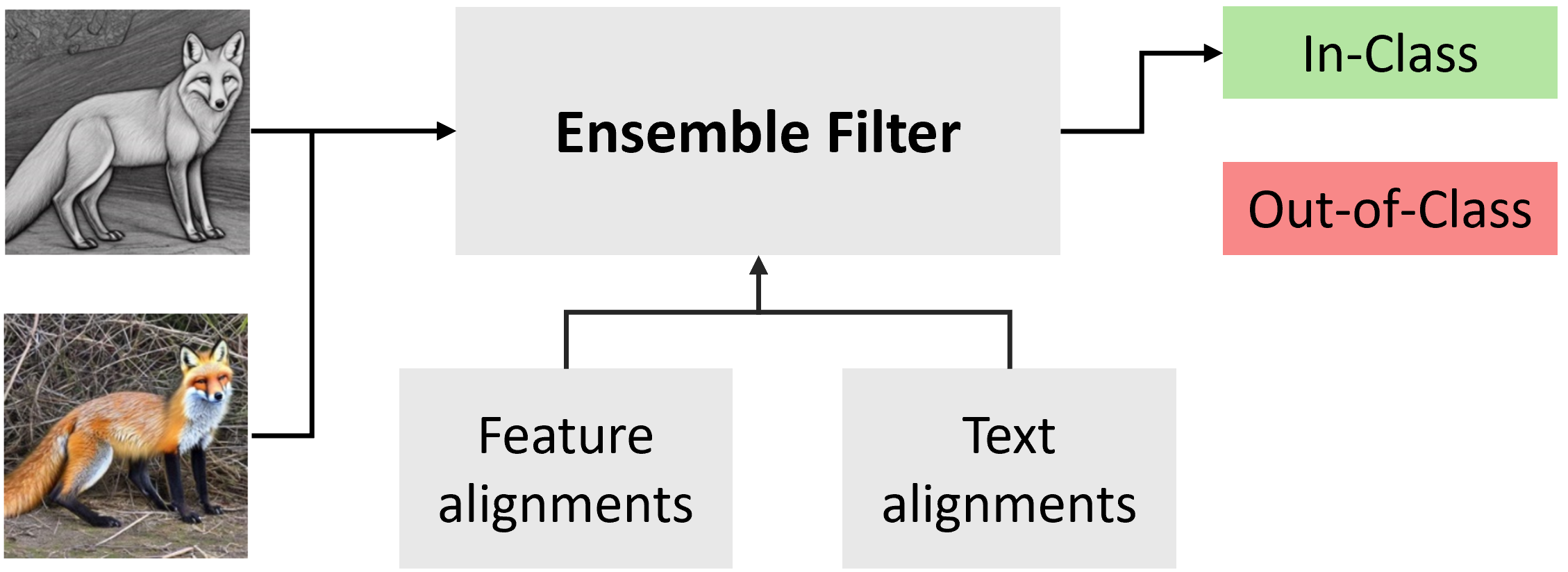

CNS-Bench generates a wide range of individual nuisance shifts at continuous severities by applying LoRA adapters to diffusion models. To remove failure cases, we propose a filtering mechanism that outperforms previous methods and thus enables reliable benchmarking with generative models.
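To illustrate how a LoRA adapter can expose a continuous severity knob, here is a minimal sketch. It assumes severity is realized by scaling the low-rank update of a single weight matrix, following the common LoRA form W + (alpha / r) * B A. The names `apply_scaled_lora` and `scale` are hypothetical and not taken from CNS-Bench; a real diffusion pipeline would apply such an update across many layers of the denoising network.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product: (len(a) x len(b)) @ (len(b) x len(b[0]))."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def apply_scaled_lora(w: Matrix, down: Matrix, up: Matrix,
                      scale: float, alpha: float = 1.0) -> Matrix:
    """Return W + scale * (alpha / r) * (up @ down).

    Hypothetical helper for illustration only.
    w:     base weight, shape d_out x d_in
    up:    LoRA "B" matrix, shape d_out x r
    down:  LoRA "A" matrix, shape r x d_in
    scale: continuous severity; 0.0 recovers the unshifted base weight
    """
    r = len(down)  # LoRA rank
    delta = matmul(up, down)
    factor = scale * alpha / r
    return [
        [w[i][j] + factor * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]
```

Sweeping `scale` over a grid such as `[0.0, 0.5, 1.0, 1.5]` yields a family of models whose outputs drift progressively further from the base model, which is the intuition behind evaluating at continuous rather than binary severities.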

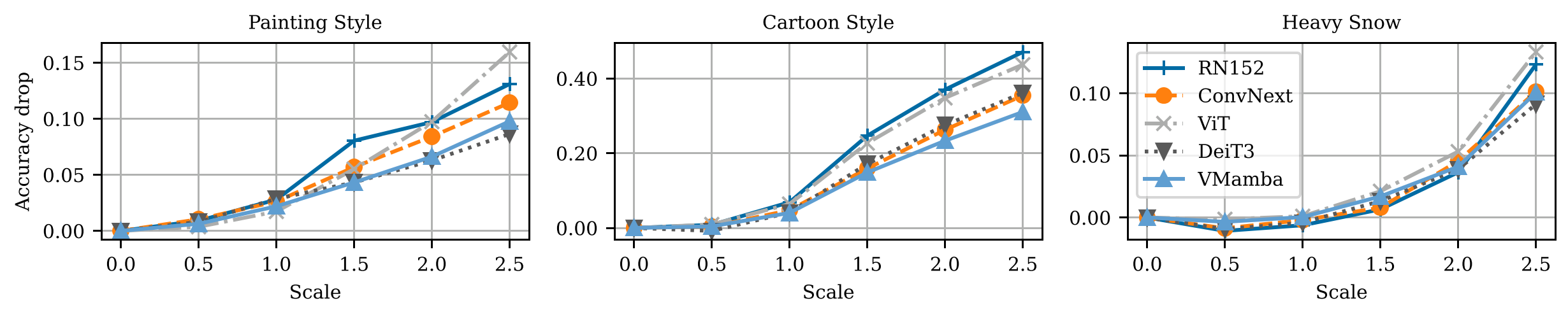

With the proposed benchmark, we perform a large-scale study to evaluate the robustness of more than 40 classifiers under various nuisance shifts.

Through carefully designed comparisons and analyses, we find that model rankings can change across shifts and shift scales, an effect that common binary shifts cannot capture.
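The ranking claim can be made concrete with a small helper. This is a minimal sketch, assuming per-model accuracies have already been measured at each shift scale; the function names are hypothetical, and the accuracy values in the usage example are made up purely for illustration.

```python
from typing import Dict, List


def rankings_per_scale(acc: Dict[str, List[float]]) -> List[List[str]]:
    """For each scale index, return model names sorted by accuracy (best first).

    acc maps model name -> accuracies ordered by increasing shift scale.
    Ties are broken alphabetically so the output is deterministic.
    """
    n_scales = len(next(iter(acc.values())))
    return [sorted(acc, key=lambda m: (-acc[m][s], m)) for s in range(n_scales)]


def ranking_changes(acc: Dict[str, List[float]]) -> List[int]:
    """Scale indices at which the ranking differs from the previous scale."""
    ranks = rankings_per_scale(acc)
    return [s for s in range(1, len(ranks)) if ranks[s] != ranks[s - 1]]


# Made-up accuracies at three shift scales (not results from the benchmark).
toy = {"model_a": [0.90, 0.80, 0.50], "model_b": [0.85, 0.82, 0.70]}
print(rankings_per_scale(toy))
print(ranking_changes(toy))
```

With these toy numbers, `model_a` ranks first at the smallest scale but `model_b` overtakes it from scale index 1 onward; an evaluation at a single fixed severity would report only one of these orderings.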

Additionally, we show that evaluating model performance on a continuous scale makes it possible to identify model failure points, providing a more nuanced understanding of model robustness.
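One simple way to operationalize a failure point on a continuous scale is sketched below. It assumes failure is defined as accuracy dropping below a fixed threshold, with linear interpolation between evaluated scales; both the threshold-based definition and the name `failure_point` are assumptions for illustration, not necessarily how CNS-Bench defines failure points.

```python
from typing import List, Optional


def failure_point(scales: List[float], acc: List[float],
                  threshold: float) -> Optional[float]:
    """Estimate the smallest shift scale at which accuracy falls below `threshold`.

    Hypothetical helper. `scales` must be increasing and aligned with `acc`.
    Linearly interpolates between the last passing and first failing scale;
    returns None if accuracy never drops below the threshold.
    """
    for i, a in enumerate(acc):
        if a < threshold:
            if i == 0:
                return scales[0]  # already failing at the smallest scale
            s0, s1 = scales[i - 1], scales[i]
            a0, a1 = acc[i - 1], acc[i]
            # a0 >= threshold > a1, so a0 - a1 > 0 and the division is safe
            return s0 + (a0 - threshold) / (a0 - a1) * (s1 - s0)
    return None
```

For example, with scales `[0.0, 1.0, 2.0]`, accuracies `[0.9, 0.7, 0.3]`, and a threshold of `0.5`, the estimated failure point is about `1.5`; comparing such points across models gives a per-shift robustness summary that a single binary evaluation cannot provide.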