DIY-SC finds semantic correspondences for extreme appearance and shape changes.

Feature refinement with filtered pseudo-labels brings significant improvements.

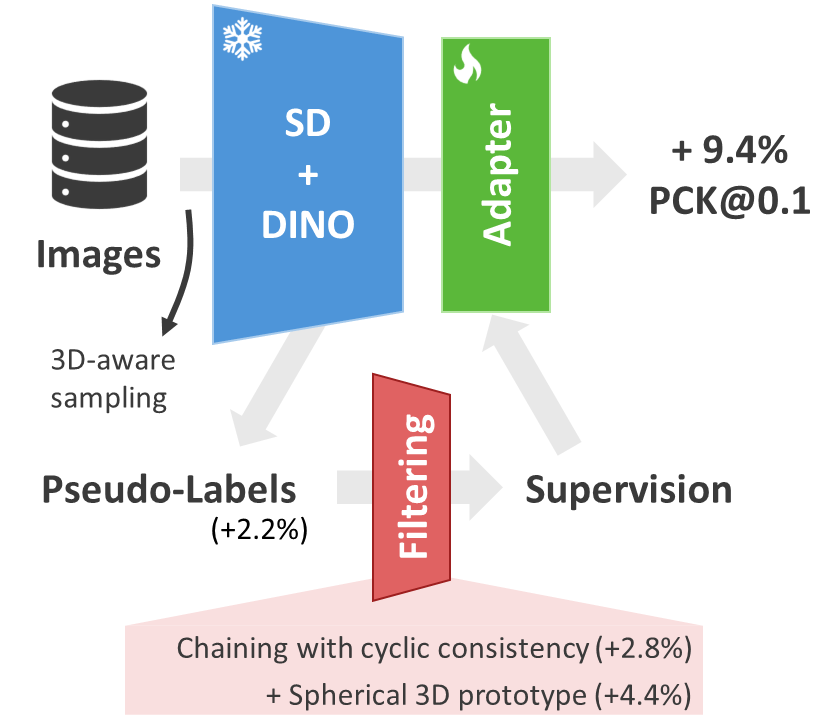

Finding correspondences between semantically similar points across images and object instances is a long-standing challenge in computer vision. While large pre-trained vision models have recently been shown to be effective priors for semantic matching, they still suffer from ambiguities on symmetric objects and repeated object parts. We propose to improve semantic correspondence estimation via 3D-aware pseudo-labeling. Specifically, we train an adapter to refine off-the-shelf features using pseudo-labels obtained via 3D-aware chaining, and we filter out wrong labels through relaxed cyclic consistency and 3D spherical prototype mapping constraints. While reducing the need for dataset-specific annotations compared to prior work, we set a new state of the art on SPair-71k, with an absolute gain of over 4%, and of over 7% against methods with similar supervision requirements. The generality of our approach simplifies extending training to other data sources, which we demonstrate in our experiments.
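Off-the-shelf features from large pre-trained models are commonly used for zero-shot semantic matching by nearest-neighbour search over dense patch descriptors, using cosine similarity. The sketch below illustrates only that baseline matching step, assuming the descriptors are given as row-wise NumPy arrays; `l2_normalize` and `nn_match` are hypothetical helpers, not the DIY-SC code, and the adapter itself is not shown.

```python
import numpy as np


def l2_normalize(x: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def nn_match(src_feats: np.ndarray, tgt_feats: np.ndarray):
    """Hypothetical helper: for each source descriptor (N x D), return the index of the
    most similar target descriptor (M x D) and the corresponding cosine similarity."""
    sim = l2_normalize(src_feats) @ l2_normalize(tgt_feats).T  # N x M similarity matrix
    idx = sim.argmax(axis=1)                                   # best target per source row
    return idx, sim[np.arange(len(idx)), idx]


# Toy 2-D "features": 3 source patches, 4 target patches.
src = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
tgt = np.array([[0.0, 2.0], [3.0, 0.1], [1.0, 1.2], [-1.0, 0.0]])
idx, score = nn_match(src, tgt)
print(idx.tolist())  # [1, 0, 2]
```

Ambiguities such as symmetric parts show up here as several target descriptors with nearly equal similarity, so `argmax` can pick the wrong one; refining the features is meant to separate such candidates.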

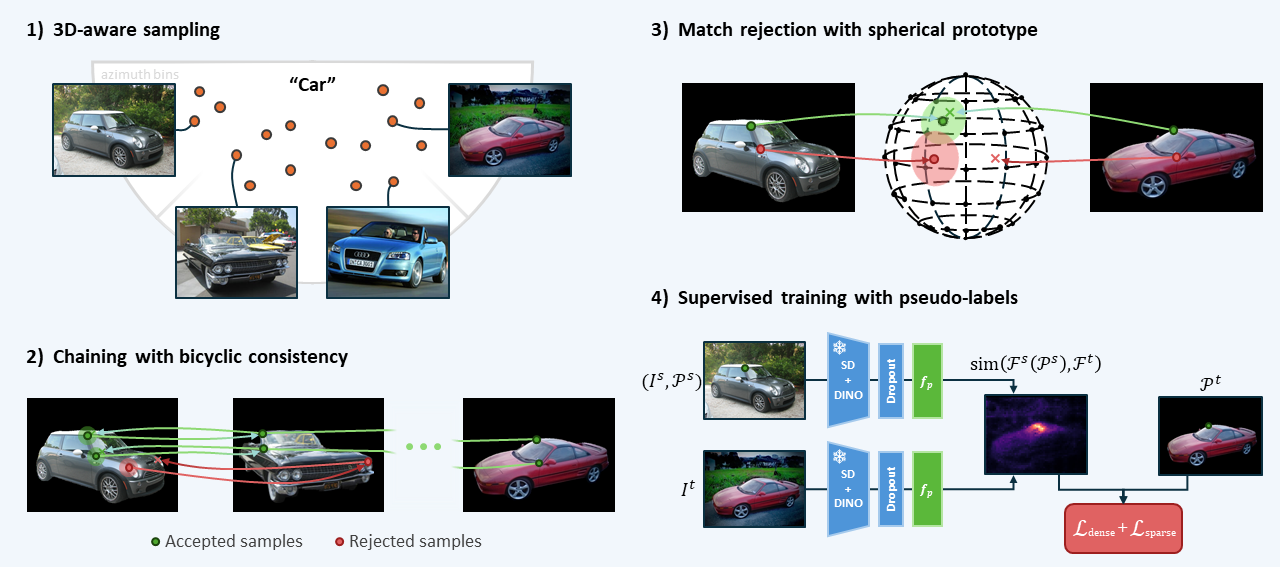

Method overview. We use azimuth information to sample image pairs for which higher zero-shot performance can be expected (1). We then chain the pairwise predictions to get correspondences for larger viewpoint changes, where we reject matches that do not fulfill a relaxed cyclic consistency constraint (2). We further filter pseudo-labels by rejecting pairs that cannot be mapped to a similar location on a 3D spherical prototype (3). Finally, we use the resulting pseudo-labels to train an adapter in a supervised manner (4).
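Step (2) above can be sketched under two assumptions that are ours, not taken from the paper: pairwise predictions are stored as nearest-neighbour index maps between patch grids, and "relaxed" cyclic consistency means the A to C to A round trip only has to land within a radius `radius` of the starting patch instead of exactly on it. `chain` and `relaxed_cycle_mask` are hypothetical names for illustration.

```python
import numpy as np


def chain(map_ab: np.ndarray, map_bc: np.ndarray) -> np.ndarray:
    """Compose nearest-neighbour maps: patch i in A -> map_ab[i] in B -> map_bc[map_ab[i]] in C."""
    return map_bc[map_ab]


def relaxed_cycle_mask(map_ac: np.ndarray, map_ca: np.ndarray,
                       coords_a: np.ndarray, radius: float) -> np.ndarray:
    """Keep A->C matches whose round trip A->C->A returns within `radius` of the start.

    radius=0 recovers strict cycle consistency; a positive radius is the (assumed) relaxation.
    """
    back = map_ca[map_ac]                                   # patch each A patch returns to
    dist = np.linalg.norm(coords_a[back] - coords_a, axis=1)
    return dist <= radius


# Toy example: image A has 4 patches on a 1 x 4 grid.
coords_a = np.array([[0, 0], [1, 0], [2, 0], [3, 0]], dtype=float)
map_ab = np.array([0, 1, 2, 3])   # A -> B (e.g. a small viewpoint change)
map_bc = np.array([1, 0, 2, 3])   # B -> C
map_ca = np.array([1, 0, 3, 0])   # direct C -> A prediction used for the cycle check

map_ac = chain(map_ab, map_bc)
strict = relaxed_cycle_mask(map_ac, map_ca, coords_a, radius=0.0)
relaxed = relaxed_cycle_mask(map_ac, map_ca, coords_a, radius=1.0)
print(strict.tolist(), relaxed.tolist())  # [True, True, False, False] [True, True, True, False]
```

Patch 2 returns to a neighbouring patch, so the strict check drops it while the relaxed check keeps it; patch 3 returns three patches away and is rejected either way. The spherical prototype filter in step (3) would then act as a further mask on the surviving matches.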

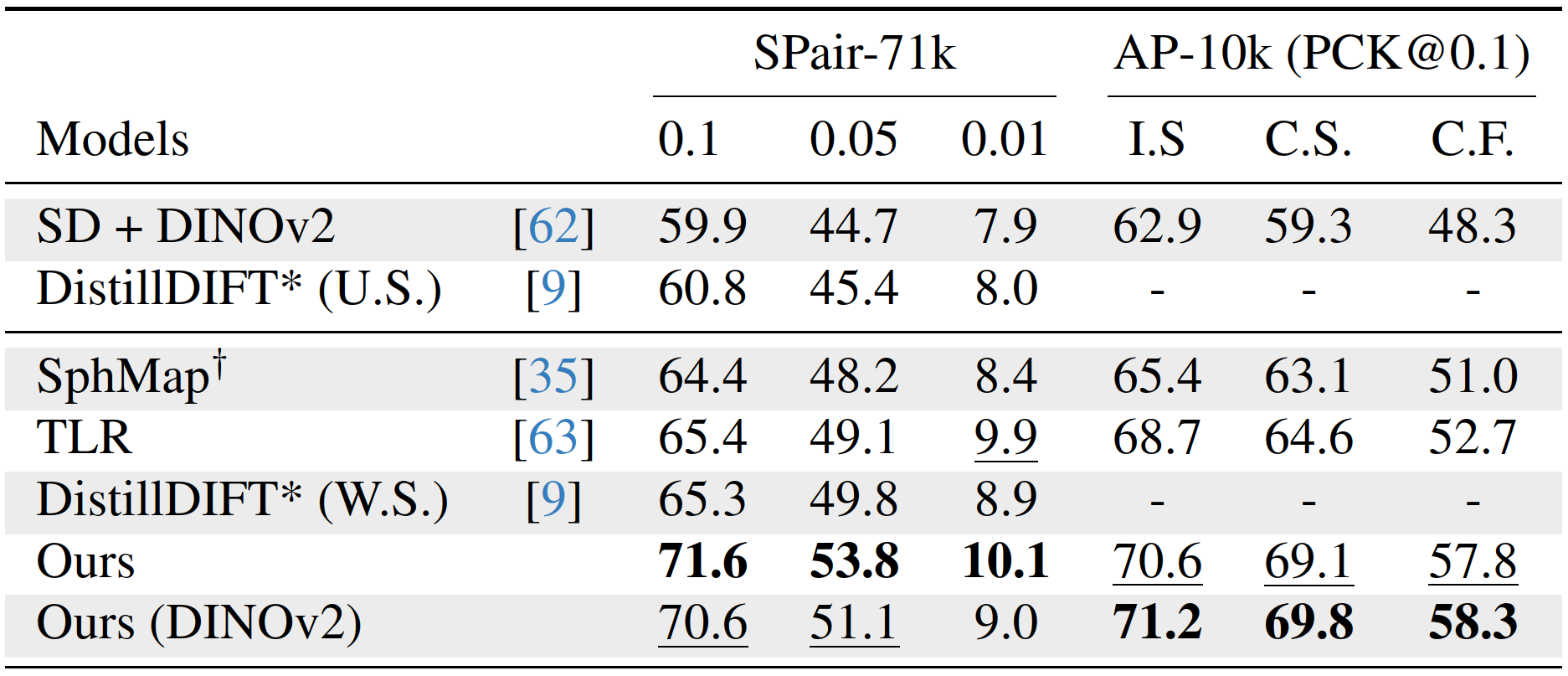

Results at different PCK thresholds (per-image) on the SPair-71k and AP-10k datasets.
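PCK@α counts a predicted keypoint as correct if it lies within α · max(h, w) of its ground-truth location; for SPair-71k, h and w are commonly the height and width of the object bounding box. "Per-image" averaging computes PCK for each image pair first and then averages over pairs. The sketch below assumes bounding-box normalization and an inclusive `<=` comparison, both following common practice rather than this page; `pck` and `per_image_pck` are hypothetical helpers.

```python
import numpy as np


def pck(pred: np.ndarray, gt: np.ndarray, bbox_hw, alpha: float = 0.1) -> float:
    """Fraction of keypoints (K x 2) within alpha * max(bbox height, bbox width) of ground truth."""
    thresh = alpha * max(bbox_hw)
    dist = np.linalg.norm(pred - gt, axis=1)
    return float((dist <= thresh).mean())


def per_image_pck(samples, alpha: float = 0.1) -> float:
    """Average PCK over image pairs so every pair counts equally, however many keypoints it has."""
    return float(np.mean([pck(p, g, hw, alpha) for p, g, hw in samples]))


# Two toy image pairs: (predicted keypoints, ground-truth keypoints, target bbox (h, w)).
samples = [
    (np.array([[10.0, 10.0], [50.0, 50.0], [100.0, 100.0]]),
     np.array([[12.0, 10.0], [50.0, 75.0], [100.0, 100.0]]), (200, 100)),  # threshold 20 px
    (np.array([[0.0, 0.0]]), np.array([[0.0, 3.0]]), (50, 50)),           # threshold 5 px
]
print(per_image_pck(samples))  # (2/3 + 1) / 2, about 0.833
```

Pooling all four keypoints instead would give 3/4 = 0.75, which is why per-image and per-keypoint PCK numbers are not directly comparable.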

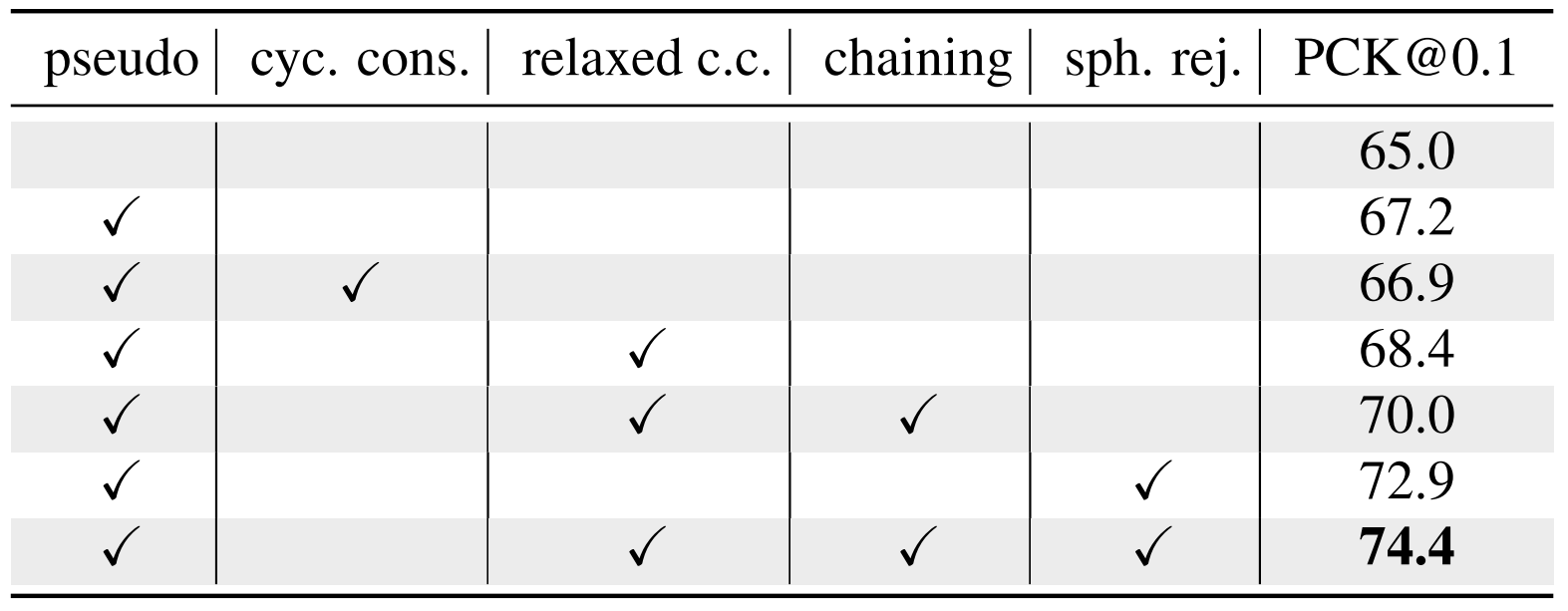

Ablations on SPair-71k.

UMAP feature visualization. The features encode 3D-aware semantic information.

Tracking example. Refining DINOv2 features with the DIY-SC adapter results in more stable tracking.

@article{duenkel2025diysc,
  title = {Do It Yourself: Learning Semantic Correspondence from Pseudo-Labels},
  author = {D{\"u}nkel, Olaf and Wimmer, Thomas and Theobalt, Christian and Rupprecht, Christian and Kortylewski, Adam},
  journal = {arXiv preprint arXiv:2506.05312},
  year = {2025}
}